Network Visualization (PyTorch)

- 在这部分用了一个已经在ImageNet上面pretrain过的CNN

- 用这个CNN来定义一个loss function,然后用这个loss来测量现在的不高兴程度

- back的时候计算这个loss对于每个像素的gradient

- 保持这个model不变,但是在图片上面展示出来gradients的下降,形成让loss最小的图片

这个作业一共分成三个部分:

- saliency map:一个比较快的方法来展示这个图片哪个部分影响了net分类的决定

- fooling image:扰乱一个图片,让他看起来跟人似的,但是会被误分类

- class visualization:形成可以得到最大分类得分的图片

注意这里需要先激活conda,不然在jupter里面torch会报错

事先处理

- 事先定义了函数preprocess的部分,因为pretrain的时候也是提前进行好了预处理

- 需要下载下来预处理的模型,这里用的是SqueezeNet,因为这样可以直接在CPU上面形成图片

- 读取一部分ImageNet里面的图片看一看是什么样子的

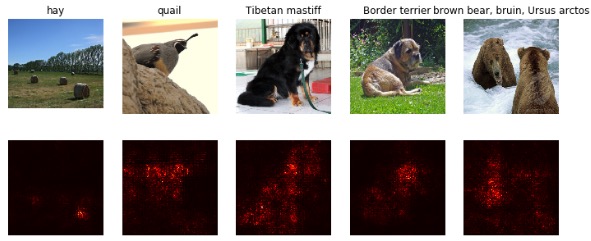

saliency maps

- saliency告诉我们每个pixel对分类得分的影响

- 为了计算这个东西,我们需要计算没有正则化之前的score对于正确分类的gradient(具体到每个pixel)

- 比如图片的大小是3xHxW,那么得到的gradient的形状也应该是3xHxW

- 表示的就是这个pixel改变的话对于整个结果改变的影响

- 为了计算,我们取每个gradient的绝对值,然后取三个channel里面的最大值,最后得到的大小是HxW

gather method

- 就像在assignment1里面选择一个矩阵里面的最大值一样,gather这个方法就是在

s.gather(1, y.view(-1, 1)).squeeze()一个N,C的矩阵s里面选择对应的y那个的值然后形成一个行的数组

compute_saliency_map

- 输入:

- X:输入的图片 (N,3,H,W)

- y:label (N,)

- model:预训练好的模型

- 输出:

- saliency,大小是(N,H,W)

- 注意,因为torch这个对象自己本来就已经带着grad了,所以直接求出来就可以了,但是注意需要定义一下backward之后的大小应该是多少

1 | def compute_saliency_maps(X, y, model): |

fooling images

- 可以生成fooling image,给一个image和一个目标class,我们让gradient一直升高,去让目标的score最大,一直到最后的分类是目标的分类

- 输入

- X (1,3,224,224)

- target_y 在0-1000的范围里面

- model 预训练的CNN

- 输出:

- x_fooling

- TODO

- When computing an update step, first normalize the gradient:

# dX = learning_rate * g / ||g||_2 - 需要自己写一个训练的部分

- When computing an update step, first normalize the gradient:

1 | def make_fooling_image(X, target_y, model): |

class visualization

- 从一个随机的noise开始然后往目标的class上面增加gradient

1 | def create_class_visualization(target_y, model, dtype, **kwargs): |