target

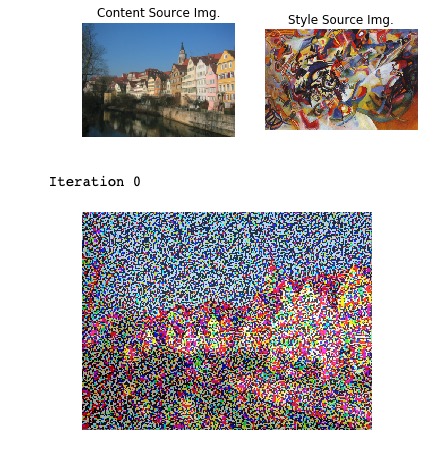

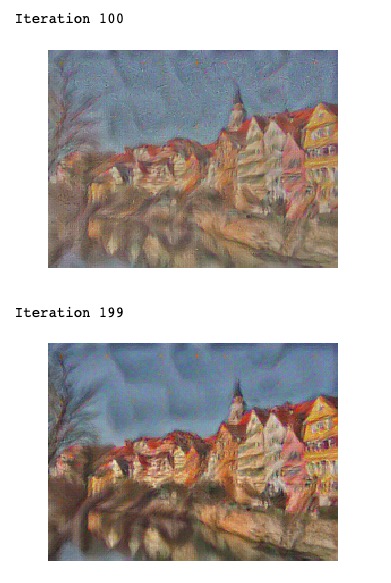

- 现在有两张图片,需要产生一些新的图片是一张图片的内容但是是另一张图片的style

- 首先我们希望可以构建一个loss function,可以连接style和每个不同的image,然后在每个图片的pixel上面降低gradient

- 在这个里面用squeezeNet(在ImageNet上面pretrain的)来提取图片的feature

预先设定好的函数

- 因为在这部分直接处理的是jpeg的图片而不是cifar-10的图片了,所以在这部分需要对出片进行预处理

- 同时需要设定一个dtype = torch.FloatTensor 来设计是用CPU跑还是用GPU跑(GPU的里面会带cuda)

CNN = torchvision.models.squeezenet1_1(pretrained=True).features提取squeezenet的model,并且设定CNN的type等于上面设定好的dtype- 因为不需要再进行训练了,需要把cnn里面的所有自动计算grad的功能关掉

提取特征

- 输入

- x,一个tensor,大小是(N,C,H,W),里面是一个minibatch的数据

- cnn,刚才载入好的model

- 输出

- features,一个list,features[i]的大小是(N,C_i,H_i,W_i)

- 在不同层得到的feature会有不同的channel的数量以及H和W的大小

- features,一个list,features[i]的大小是(N,C_i,H_i,W_i)

- 实现:

- 在具体的代码实现里面,直接用value得到每一层之后的结果,下一层的输入就是上一层得到的结果

1

2

3

4

5

6

7

8

9def extract_features(x, cnn):

features = []

prev_feat = x

for i, module in enumerate(cnn._modules.values()):

next_feat = module(prev_feat)

features.append(next_feat)

prev_feat = next_feat

return features

- 在具体的代码实现里面,直接用value得到每一层之后的结果,下一层的输入就是上一层得到的结果

计算loss

loss一共由三个部分组成,分别是:图片content的loss + style的loss + total var loss

- 我们这个东西的目的是用一张图片的内容和另一个图片的style

- 当内容偏离了content图片的content,style偏离了stype图片的时候就需要penalize(处罚)

- 为了实现这个功能,我们需要用hybrid的loss,并且不是在weights上面调参,而是在每张图片的pixel上面调整

content loss

- 这个函数衡量生成的图片的feature map和原来作为content的图片偏离多少

- 我们只关心这个network里面的一层的表示,这一层会有自己特定的channel数量以及filter的大小

- 我们需要把这个feature map reshape,把所有的空间位置组合到同一个维度上面

- 但是在实际的实现上面,我们不需要再reshape了,因为大小可以直接对应处理了

1 | def content_loss(content_weight, content_current, content_original): |

style loss

对于一个给定的层layer,定义loss

- 计算Gram Mat,G,表示不同filter的相关性。这个矩阵是个协方差矩阵,我们希望形成的图片的activation 统计和style图片的可以match,计算这两个的协方差就是一个办法(并且经过验证效果比较好)

- 给定一个feature map,G矩阵的形状应该是(Cl,Cl)。Cl是这一层的filter的数量。里面的元素应该等于两个filter的乘积

- 把生成图片的G和style图片的G做差,平方和就是一层的loss

所有层的loss加在一起就是总共的loss

G Mat implement

- view(),形成一个内容相同但是大小不同的tensor

- .matmul 两个tensor相乘

- .permute 给tensor里面的维度换位

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31def gram_matrix(features, normalize=True):

"""

Compute the Gram matrix from features.

Inputs:

- features: PyTorch Tensor of shape (N, C, H, W) giving features for

a batch of N images.

- normalize: optional, whether to normalize the Gram matrix

If True, divide the Gram matrix by the number of neurons (H * W * C)

Returns:

- gram: PyTorch Tensor of shape (N, C, C) giving the

(optionally normalized) Gram matrices for the N input images.

"""

# *****START OF YOUR CODE (DO NOT DELETE/MODIFY THIS LINE)*****

N,C,H,W = features.size()

# N,C,M

features = features.view(N,C,H*W)

# N,C,M x N,M,C -> N,C,C

gram = features.matmul(features.permute(0,2,1))

if normalize==True:

gram /= (H*W*C)

return gram

# *****END OF YOUR CODE (DO NOT DELETE/MODIFY THIS LINE)*****

loss implement

- 输入

- feats:现在图片的每一层的feature,从上面的提取特征函数得到

- style_layers:indices

- style_targets:和上面的长度相同,计算的是第i层原图片得到的G Mat

- style_weights:scalar

- 在计算的时候只需要考虑每一层里面计算出来的现在的G Mat(注意索引不是i)和原图片的G,和上面一样的计算就可以了

- 输入

1 | # Now put it together in the style_loss function... |

total-variation reg

- 为了让图片显示的内容更加平滑,加入了这个惩罚部分

- 计算的方法可以是计算每个像素和它相邻像素的差的平方和(相邻像素分别包括垂直和水平)

- 需要让结果vec化,直接用-1把矩阵错位一个

1 | def tv_loss(img, tv_weight): |

已经写好了转化style的函数

- 首先提取content和style图片的特征

- 然后初始化需要生成的图片,这张图片上面需要打开grad

- 设置好hyper,设定好optimizer

- 然后在一定的范围里,用cnn提取现在图片的特征

- 用现在的特征计算loss,然后改变现在的图片