For this part you pick one of two frameworks: PyTorch or TensorFlow.

PyTorch

What

- Adds a Tensor object (similar to a NumPy ndarray) with automatic differentiation, so backprop no longer has to be written by hand

Why

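- Runs on the GPU: you can train a NN on your own GPU without writing CUDA code directly

- Lots of built-in functions

- Stand on the shoulders of giants!

- This is the kind of deep learning code you should be writing in practice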

Learning resources

- Justin Johnson has made an excellent tutorial for PyTorch.

- Detailed API doc

- If you have other questions that are not addressed by the API docs, the PyTorch forum is a much better place to ask than StackOverflow.

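Overall structure

- Part 1: preparation, working with the dataset

- Part 2: abstraction level 1, operating directly on the lowest-level Tensors

- Part 3: abstraction level 2, nn.Module to define an arbitrary NN architecture

- Part 4: abstraction level 3, nn.Sequential to define a simple linear feed-forward network

- Part 5: tune things yourself and push the CIFAR-10 accuracy as high as possible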

Part 1. Preparation

PyTorch ships with tools for downloading datasets, preprocessing them, and iterating over them in minibatches.

import torchvision.transforms as T

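- This package provides preprocessing and data-augmentation utilities; here we subtract the mean RGB value and divide by the standard deviation

- A Dataset object is then built for each split (train, val, test). The Dataset loads training examples one at a time, and a DataLoader wraps it to build the minibatches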

`NUM_TRAIN = 49000`
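As a rough sketch of the Dataset/DataLoader split described above, the snippet below substitutes random tensors for CIFAR-10 so that it runs without a download. In the real setup the images would come from torchvision's CIFAR-10 dataset and the normalization from `T.Normalize`; the small sizes, the `NUM_VAL` name and the batch size of 64 are assumptions made for illustration.

```python
import torch
from torch.utils.data import DataLoader, TensorDataset, SubsetRandomSampler

# Stand-in data (assumption): 500 fake RGB images instead of the real CIFAR-10.
NUM_TRAIN, NUM_VAL = 490, 10
images = torch.rand(NUM_TRAIN + NUM_VAL, 3, 32, 32)
labels = torch.randint(0, 10, (NUM_TRAIN + NUM_VAL,))

# Subtract the per-channel mean and divide by the per-channel std.
mean = images.mean(dim=(0, 2, 3), keepdim=True)
std = images.std(dim=(0, 2, 3), keepdim=True)
dataset = TensorDataset((images - mean) / std, labels)

# One Dataset, two loaders: the samplers pick disjoint index ranges.
loader_train = DataLoader(
    dataset, batch_size=64, sampler=SubsetRandomSampler(range(NUM_TRAIN)))
loader_val = DataLoader(
    dataset, batch_size=64,
    sampler=SubsetRandomSampler(range(NUM_TRAIN, NUM_TRAIN + NUM_VAL)))

x, y = next(iter(loader_train))
print(x.shape, y.shape)  # torch.Size([64, 3, 32, 32]) torch.Size([64])
```

Using samplers over one Dataset is only one way to carve out a validation split; separate Dataset objects per split work just as well.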

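- A flag controls whether to use the GPU, and it is set to True here. A GPU is not required for this assignment; if CUDA cannot be enabled on the machine, the code automatically falls back to CPU mode

- In addition, two global variables are defined: dtype, which is float32, and device, which says where to run

- Macs do not support CUDA, and recent macOS versions apparently can no longer install the NVIDIA drivers, so I am running on the CPU for now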

`USE_GPU = True`
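The fallback behaviour described above can be sketched as follows. This is a minimal version that assumes only the `USE_GPU`, `dtype` and `device` names mentioned in the notes; the small test tensor is added for illustration.

```python
import torch

USE_GPU = True
dtype = torch.float32  # all tensors in these notes use float32

# Fall back to the CPU automatically when CUDA is not available.
if USE_GPU and torch.cuda.is_available():
    device = torch.device('cuda')
else:
    device = torch.device('cpu')

# Tensors are created on (or moved to) the chosen device explicitly.
x = torch.zeros(2, 3, dtype=dtype, device=device)
print('using device:', device)
```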

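Part 2. Barebones PyTorch

- The high-level APIs already provide a lot of functionality, but this part deliberately works at a fairly low level

- Build a simple fc-relu net with two hidden layers and no bias

- Use Tensor methods to compute the forward pass, and the built-in autograd to compute the backward pass

- If requires_grad = True is set on a Tensor, operations on it compute not only the values but also the graph needed for the backward pass

- If x is a Tensor with x.requires_grad == True, then after backpropagation x.grad will be another Tensor holding the gradient of the scalar loss at the end with respect to x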

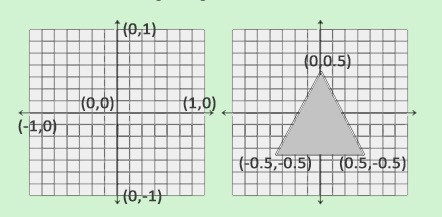
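A tiny example of the `requires_grad` behaviour described above; the tensor values and the squared-sum loss are chosen only for illustration.

```python
import torch

x = torch.tensor([1.0, 2.0, 3.0], requires_grad=True)

# The graph is recorded because x requires grad.
loss = (x ** 2).sum()   # scalar loss

# Backprop fills x.grad with d(loss)/dx = 2 * x.
loss.backward()
print(x.grad)  # tensor([2., 4., 6.])
```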

PyTorch Tensors: Flatten Function

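- Tensors are a lot like NumPy ndarrays and come with many convenient operations; here a flatten function is used to reshape image data

- A batch of images is stored in a Tensor of shape N x C x H x W

  - N: number of datapoints

  - C: number of channels

  - H, W: height and width of the feature map

- For an affine layer, however, we want each datapoint to be a single vector rather than a channel x height x width block

- So flatten takes the N x C x H x W data and returns a view of it (the equivalent of reshape on an array) with shape N x ??, where ?? is inferred from the remaining dimensions (here C x H x W)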
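A minimal sketch of such a flatten function, assuming the input is a contiguous N x C x H x W tensor (which `view` requires):

```python
import torch

def flatten(x):
    """Reshape an (N, C, H, W) batch into (N, C*H*W) without copying data."""
    N = x.shape[0]          # keep the batch dimension
    return x.view(N, -1)    # -1 lets PyTorch infer C*H*W

x = torch.arange(12).view(2, 1, 3, 2)
flat = flatten(x)
print(flat.shape)  # torch.Size([2, 6])
```

Because `view` returns a view rather than a copy, `flat` shares storage with `x`.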


Barebones PyTorch: Two-Layer Network

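two_layer_fc defines a forward pass with two fully-connected layers and a ReLU in between. Once the forward pass is written, check that the output has the right shape and that nothing else is off (tensor sizes no longer seem to trip me up these days).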

`import torch.nn.functional as F  # useful stateless functions`
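A sketch of what such a forward pass could look like, followed by the shape check. The layer sizes (50 inputs, 42 hidden units, 10 classes) are arbitrary values picked for the test, not taken from the notes.

```python
import torch
import torch.nn.functional as F

def two_layer_fc(x, params):
    """fc -> ReLU -> fc, no biases. Returns class scores of shape (N, C)."""
    w1, w2 = params
    x = x.view(x.shape[0], -1)   # flatten each datapoint into one vector
    h = F.relu(x.mm(w1))         # hidden layer, shape (N, H)
    return h.mm(w2)              # class scores, shape (N, C)

# Shape check with zero tensors.
x = torch.zeros(64, 50)
w1 = torch.zeros(50, 42)
w2 = torch.zeros(42, 10)
scores = two_layer_fc(x, [w1, w2])
print(scores.shape)  # torch.Size([64, 10])
```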

Barebones PyTorch: Three-Layer ConvNet

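- For both this network and the one above, you can pass in all-zero tensors when testing to check that the tensor shapes are right

- Network architecture

  - conv with bias, channel_1 filters, each KW1 x KH1, zero-padding of 2

  - ReLU

  - conv with bias, channel_2 filters, each KW2 x KH2, zero-padding of 1

  - ReLU

  - fc with bias, producing scores for C classes

- Note 1: there is no softmax activation after the fc layer, because the loss computed later applies the softmax itself, which is more efficient

- Note 2: no flatten is needed before conv2d; flatten only right before the fc layer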
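The architecture above could be written with the stateless `F.conv2d` roughly as below. The parameter ordering in `params` and the channel counts in the shape check (6 and 9) are assumptions for illustration.

```python
import torch
import torch.nn.functional as F

def three_layer_convnet(x, params):
    """conv -> ReLU -> conv -> ReLU -> fc; returns raw scores (no softmax)."""
    conv_w1, conv_b1, conv_w2, conv_b2, fc_w, fc_b = params
    x = F.relu(F.conv2d(x, conv_w1, conv_b1, padding=2))
    x = F.relu(F.conv2d(x, conv_w2, conv_b2, padding=1))
    x = x.view(x.shape[0], -1)      # flatten only before the fc layer
    return x.mm(fc_w) + fc_b

# Shape check with zero tensors: a batch of 64 CIFAR-sized images.
x = torch.zeros(64, 3, 32, 32)
params = [
    torch.zeros(6, 3, 5, 5), torch.zeros(6),       # conv1: 6 filters, 5x5
    torch.zeros(9, 6, 3, 3), torch.zeros(9),       # conv2: 9 filters, 3x3
    torch.zeros(9 * 32 * 32, 10), torch.zeros(10), # fc: D x C
]
scores = three_layer_convnet(x, params)
print(scores.shape)  # torch.Size([64, 10])
```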


Barebones PyTorch: Initialization

- random_weight(shape) initializes a weight tensor with the Kaiming normalization method.

- zero_weight(shape) initializes a weight tensor with all zeros. Useful for instantiating bias parameters.
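A possible implementation of the two helpers, assuming fc weights are stored as (in_features, out_features) and conv weights as (out_channels, in_channels, kH, kW); device and dtype handling is left out to keep the sketch short.

```python
import math
import torch

def random_weight(shape):
    """Kaiming-style init: standard normal scaled by sqrt(2 / fan_in)."""
    if len(shape) == 2:
        fan_in = shape[0]               # fc weight: (in_features, out_features)
    else:
        fan_in = math.prod(shape[1:])   # conv weight: in_channels * kH * kW
    w = torch.randn(shape) * math.sqrt(2.0 / fan_in)
    w.requires_grad = True              # track gradients from here on
    return w

def zero_weight(shape):
    """All-zero tensor that tracks gradients, e.g. for biases."""
    return torch.zeros(shape, requires_grad=True)

w = random_weight((3, 5))
b = zero_weight((5,))
print(w.shape, b.shape)  # torch.Size([3, 5]) torch.Size([5])
```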


Barebones PyTorch: Check Accuracy

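- No gradients are needed here, so turn them off with torch.no_grad() to avoid wasted computation

- Inputs

  - a DataLoader that splits the data we want to check into batches

  - a model_fn that describes the model and computes the predicted scores

  - the params this model needs

- No return value, but the accuracy is printed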
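A sketch of such a function under the interface listed above. Unlike the version described in the notes, this one also returns the accuracy so it can be checked programmatically; the toy linear model and four-point dataset are assumptions for the usage example.

```python
import torch
from torch.utils.data import DataLoader, TensorDataset

def check_accuracy(loader, model_fn, params):
    num_correct, num_samples = 0, 0
    with torch.no_grad():                  # no graph is built inside this block
        for x, y in loader:
            scores = model_fn(x, params)
            preds = scores.argmax(dim=1)   # predicted class = highest score
            num_correct += (preds == y).sum().item()
            num_samples += y.shape[0]
    acc = num_correct / num_samples
    print('Got %d / %d correct (%.2f%%)' % (num_correct, num_samples, 100 * acc))
    return acc  # returned for convenience; the notes' version only prints

# Toy usage: identity weights, so the larger coordinate decides the class.
def linear_fn(x, params):
    return x.mm(params[0])

x = torch.tensor([[1., 0.], [0., 1.], [2., 1.], [0., 3.]])
y = torch.tensor([0, 1, 0, 1])
loader = DataLoader(TensorDataset(x, y), batch_size=2)
w = torch.eye(2, requires_grad=True)
acc = check_accuracy(loader, linear_fn, [w])
```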


Barebones PyTorch: Training Loop

- Train with stochastic gradient descent without momentum, and use torch.nn.functional.cross_entropy to compute the loss

- Inputs

  - model_fn

  - params

  - learning_rate

- No outputs

- Steps performed

  - move the data to the GPU or CPU

  - compute the scores and the loss

  - loss.backward()

  - update the params; this step must not be tracked by autograd
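The last three steps can be sketched as a single update function. The name `sgd_step` and the toy linear model are hypothetical, and moving the data to a device is omitted for brevity.

```python
import torch
import torch.nn.functional as F

def sgd_step(model_fn, params, x, y, learning_rate):
    """One SGD step without momentum; returns the loss before the update."""
    scores = model_fn(x, params)
    loss = F.cross_entropy(scores, y)   # applies softmax internally
    loss.backward()                     # fills w.grad for every param
    with torch.no_grad():               # the update itself is not tracked
        for w in params:
            w -= learning_rate * w.grad
            w.grad.zero_()              # clear grads for the next iteration
    return loss.item()

# Toy usage: a linear classifier on random data, trained for 20 steps.
torch.manual_seed(0)
x = torch.randn(32, 5)
y = torch.randint(0, 3, (32,))
w = torch.zeros(5, 3, requires_grad=True)
linear_fn = lambda x, params: x.mm(params[0])
losses = [sgd_step(linear_fn, [w], x, y, learning_rate=0.1) for _ in range(20)]
print(losses[0], losses[-1])
```

Zeroing the gradients after each update matters because `backward()` accumulates into `.grad` rather than overwriting it.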

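Barebones PyTorch: Training a ConvNet

- Required network

  - Convolutional layer (with bias) with 32 5x5 filters, with zero-padding of 2

  - ReLU

  - Convolutional layer (with bias) with 16 3x3 filters, with zero-padding of 1

  - ReLU

  - Fully-connected layer (with bias) to compute scores for 10 classes

- You have to initialize the parameters yourself; no hyperparameter tuning is needed

- Note 1: the fc weight has shape (D, C); D is not the raw input size and has to be worked out from the output of the previous layer

- With the zero-padding above, both convs keep the spatial size at 32x32, so D = 16 x 32 x 32; a 3x3 conv without padding would shrink 32 -> 30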
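The size of D follows from the standard convolution output-size formula, sketched here in plain Python; the helper name `conv_out_size` is made up for this example.

```python
def conv_out_size(size, kernel, padding=0, stride=1):
    """Spatial output size of a convolution along one dimension."""
    return (size - kernel + 2 * padding) // stride + 1

h = conv_out_size(32, kernel=5, padding=2)   # after the first conv
h = conv_out_size(h, kernel=3, padding=1)    # after the second conv
D = 16 * h * h                               # input size of the fc layer
print(h, D)                                  # 32 16384
print(conv_out_size(32, kernel=3))           # 30 (no padding)
```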

`learning_rate = 3e-3`

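Part 3. PyTorch Module API

- Everything above tracked all the parameters by hand, which stops being practical for larger nets

- Use nn.Module to define the network; you can also choose the optimization method (torch.optim)

The steps:

- Subclass nn.Module. Give your network class an intuitive name like TwoLayerFC.

- In __init__(), define all the layers you need. nn.Linear and nn.Conv2d are built into the module, and nn.Module will track these internal parameters for you. Refer to the doc to learn more about the dozens of builtin layers. Warning: don't forget to call super().__init__() first (it calls the parent class constructor)!

- In the forward() method, define the connectivity of your network. Use the layers initialized in __init__ directly; do not create new layers inside forward().

Use the recipe above to write a three-layer ConvNet

- Note that the w and b parameters need to be initialized, using the Kaiming method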

`class ThreeLayerConvNet(nn.Module):`
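One way the class could be filled in, following the three steps above. The constructor arguments, the zero bias init, and the assumption of 32x32 inputs are illustrative choices, not necessarily what the original code used.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

class ThreeLayerConvNet(nn.Module):
    def __init__(self, in_channel, channel_1, channel_2, num_classes):
        super().__init__()   # must come first so nn.Module can register layers
        self.conv1 = nn.Conv2d(in_channel, channel_1, kernel_size=5, padding=2)
        self.conv2 = nn.Conv2d(channel_1, channel_2, kernel_size=3, padding=1)
        self.fc = nn.Linear(channel_2 * 32 * 32, num_classes)  # assumes 32x32 input
        for layer in (self.conv1, self.conv2, self.fc):
            nn.init.kaiming_normal_(layer.weight)   # Kaiming init for weights
            nn.init.constant_(layer.bias, 0)        # zero biases (assumption)

    def forward(self, x):
        # Only wire together layers created in __init__.
        x = F.relu(self.conv1(x))
        x = F.relu(self.conv2(x))
        x = x.view(x.shape[0], -1)
        return self.fc(x)

model = ThreeLayerConvNet(in_channel=3, channel_1=12, channel_2=8, num_classes=10)
scores = model(torch.zeros(64, 3, 32, 32))
print(scores.shape)  # torch.Size([64, 10])
```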

Module API: Check Accuracy

- No need to pass the parameters in by hand any more; you get the accuracy of the whole net directly from the model

Module API: Training Loop

- Use an optimizer object to update the weights

- Inputs

  - model

  - optimizer

  - epochs, optional

- Returns nothing, but prints the accuracy during training

In practice, all you have to do is set up the model and the optimizer.
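A stripped-down sketch of such a loop. Here the data loader is passed in explicitly and the final loss is returned instead of printing accuracy, both of which are simplifications for this example; the toy `nn.Linear` model and random data are assumptions.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F
import torch.optim as optim

def train(model, optimizer, loader, epochs=1):
    """Run `epochs` passes over `loader`; returns the last minibatch loss."""
    model.train()                       # put layers such as BN/dropout in train mode
    for _ in range(epochs):
        for x, y in loader:
            loss = F.cross_entropy(model(x), y)
            optimizer.zero_grad()       # clear gradients from the previous step
            loss.backward()             # compute new gradients
            optimizer.step()            # let the optimizer update the weights
    return loss.item()

# Toy usage: a linear model on one fixed batch.
torch.manual_seed(0)
x, y = torch.randn(32, 5), torch.randint(0, 3, (32,))
model = nn.Linear(5, 3)
optimizer = optim.SGD(model.parameters(), lr=0.1)
first = F.cross_entropy(model(x), y).item()
last = train(model, optimizer, [(x, y)], epochs=20)
print(first, last)
```

Swapping in a different optimizer, for example one with momentum, only changes the line that constructs `optimizer`.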

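Part 4. PyTorch Sequential API

- nn.Sequential is less flexible than the Module API above, but it bundles the whole chain of steps above into one object

- A flatten that can be used inside the forward pass has to be defined beforehand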

`` # We need to wrap `flatten` function in a module in order to stack it ``
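Such a wrapper can be as small as the sketch below; the one-layer model around it exists only to show that the module stacks inside nn.Sequential.

```python
import torch
import torch.nn as nn

class Flatten(nn.Module):
    """Module version of flatten so it can be placed inside nn.Sequential."""
    def forward(self, x):
        return x.view(x.shape[0], -1)

model = nn.Sequential(
    Flatten(),
    nn.Linear(3 * 32 * 32, 10),
)
scores = model(torch.zeros(4, 3, 32, 32))
print(scores.shape)  # torch.Size([4, 10])
```

Recent PyTorch releases also ship a built-in `nn.Flatten` that serves the same purpose.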

Implement the three-layer net; note that the parameters need to be initialized

`def xavier_normal(shape):`
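A sketch of the three-layer net in Sequential form. It uses the built-in `nn.Flatten` and Kaiming init through `model.apply`, which is one possible approach and not necessarily the initialization helper the original code defined; the `init_weights` name is hypothetical.

```python
import torch
import torch.nn as nn

model = nn.Sequential(
    nn.Conv2d(3, 32, kernel_size=5, padding=2),
    nn.ReLU(),
    nn.Conv2d(32, 16, kernel_size=3, padding=1),
    nn.ReLU(),
    nn.Flatten(),                   # built-in equivalent of the Flatten wrapper
    nn.Linear(16 * 32 * 32, 10),
)

def init_weights(m):
    """Kaiming-normal weights and zero biases for conv and linear layers."""
    if isinstance(m, (nn.Conv2d, nn.Linear)):
        nn.init.kaiming_normal_(m.weight)
        nn.init.zeros_(m.bias)

model.apply(init_weights)           # visits every submodule once
scores = model(torch.zeros(2, 3, 32, 32))
print(scores.shape)  # torch.Size([2, 10])
```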

Part 5. Train on CIFAR-10!

Pick your own net architecture, hyperparameters, loss and optimizer to push the CIFAR-10 val_acc above 70% within 10 epochs!

- Layers in torch.nn package: http://pytorch.org/docs/stable/nn.html

- Activations: http://pytorch.org/docs/stable/nn.html#non-linear-activations

- Loss functions: http://pytorch.org/docs/stable/nn.html#loss-functions

- Optimizers: http://pytorch.org/docs/stable/optim.html

Some things to try:

- Filter size: Above we used 5x5; would smaller filters be more efficient?

- Number of filters: Above we used 32 filters. Do more or fewer do better?

- Pooling vs Strided Convolution: Do you use max pooling or just stride convolutions?

- Batch normalization: Try adding spatial batch normalization after convolution layers and vanilla batch normalization after affine layers. Do your networks train faster?

- Network architecture: The network above has two layers of trainable parameters. Can you do better with a deep network? Good architectures to try include:

- [conv-relu-pool]xN -> [affine]xM -> [softmax or SVM]

- [conv-relu-conv-relu-pool]xN -> [affine]xM -> [softmax or SVM]

- [batchnorm-relu-conv]xN -> [affine]xM -> [softmax or SVM]

- Global Average Pooling: Instead of flattening and then having multiple affine layers, perform convolutions until your image gets small (7x7 or so) and then perform an average pooling operation to get to a 1x1 image picture (1, 1 , Filter#), which is then reshaped into a (Filter#) vector. This is used in Google’s Inception Network (See Table 1 for their architecture).

- Regularization: Add l2 weight regularization, or perhaps use Dropout.

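Some tips:

- If the hyperparameters are working well, you should see improvement within a few hundred iterations

- When tuning hyperparameters, start with a wide range and short training runs; once you find promising values, search more finely around them (and train for longer)

- Use the val set when searching for hyperparameters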

`model = None`

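- Tried ksize=1 for the fourth conv layer; it did not work very well

- BN seems to help a lot

- More max pooling lowers the compute cost and also seems to give better results

- Final val_acc was around 77-79%, with test_acc = 76.22%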
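For reference, a network in the spirit of these observations (batch norm after every conv, max pooling after every block, global average pooling at the end) could look like the sketch below. This is a hypothetical architecture and not the model that produced the numbers above; the channel widths and the `conv_block` helper are assumptions.

```python
import torch
import torch.nn as nn

def conv_block(in_ch, out_ch):
    """conv -> batchnorm -> ReLU -> 2x2 max pool (halves the spatial size)."""
    return nn.Sequential(
        nn.Conv2d(in_ch, out_ch, kernel_size=3, padding=1),
        nn.BatchNorm2d(out_ch),
        nn.ReLU(),
        nn.MaxPool2d(2),
    )

model = nn.Sequential(
    conv_block(3, 32),         # 32x32 -> 16x16
    conv_block(32, 64),        # 16x16 -> 8x8
    conv_block(64, 128),       # 8x8 -> 4x4
    nn.AdaptiveAvgPool2d(1),   # global average pooling -> 1x1
    nn.Flatten(),
    nn.Linear(128, 10),        # raw scores; the loss applies softmax
)

scores = model(torch.zeros(8, 3, 32, 32))
print(scores.shape)  # torch.Size([8, 10])
```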